Testing cloudflare D1

Published at 2024-3-12

Cloudflare D1

There seems to be a new “serverless” database option every few months, theyre mostly just a cloud managed SQL instance with automatic management and scaling. There’s also NoSQL… I’m not really sure they offer much benefit over hosting on a VPS for most use cases.

I did really like planetscale though, but they’ve recently removed there free tier and made a minimum of $40/month entry its no longer an option for me.

I’ve also started using Cloudflare “pages” to deploy small sites, like this one. I’ve found it less burdensome than AWS lambda and the other 15 acryonmys you need to configure to get it to work,

With this i’ve been using Cloudflare D1 - cloudflare “serverless” database offering. Which is efficetlvey a slightly modified sqlite database that runs in something called a “durable object”

It’s a bit different to Planetscale ect in that you don’t get a mysql connection string instead you “bind” the database to a pages project or a worker function (Cloudflare Lambda equvilent)

Then you can run querys against the sqlite database just like you would with a locally hosted sqlite database.

It’s quite easy to set up, its got reasonable pricing, a generous free tier, its also fairly quick to query, its got some weird backup type thing with some AWS like name timemachine? or maybe timetravel.

Issues

I wrote this before D1 became “generally available”, decided it was unfair, deleted it. D1 became GA and not much has changed so here it is.

You can only use within cloudflare ecosystem, there is a REST API it is unusably slow, if for some reason you wanted to access it ouside of cloudflare, you would be best off re-implementing a HTTP API in a cloudflare worker, I don’t know why you would want to do this though…

The main issue with it is your limited to 10gb per database so if you think your going to need to store more than that it isn’t really an option currently. Lots of people are quite confused by this and if you look at the cloduflare discord, theres just a series of people going is it really 10gb? over and over again.

Conceptually the cloudflare team suggest sharding the database to enable more storage, as you can currently have 50k databases (and apparently more on request). Suggesting sharding methods such as per user or per region, however there just isnt a good way to shard D1 at the moment. Currently to make a new database and to enable it to be used by a pages project or a worker, the pages project or worker will need to be re-deployed. This clearly doesen’t work for a per user sharding method You would also have to manage the sharding yourself and come up with a sensible way of implementing schema migrations…

Its also single region at the moment, although thats difficult to establish from reading about D1, as blog posts from 2022 on Cloudflare’s website suggest it support read replication. In fact its a little hard to work out a lot of the featurs of D1 without stalking a discord server.

Its a little bit misleading, I looked at D1 thinking that read replication was enabled from the start, and if you go on the Discord (which is where you need to go to decode D1’s features) you will find i’m not the only one.

It also doesen’t auto-scale, the abovementioned read replicas aren’t operational and as D1 is modifed SQLite in a durable object, your limited by the performance of a durable object, which are only single threaded and if you do some digging can handle about 300rps.

But that’s fine because we know the limits of cloudflare D1 right? Well no. In fact if you ask on the discord you’ll get a slightly crypytic response

Anyway lets do some testing to find out, theres a few hints about what the limits will be.

Anyway lets do some testing to find out, theres a few hints about what the limits will be.

Well it transpires that each D1 database is stored in a single durabal object, so are limited by the capabilities of the durabal object.

In the CF docs we can find that “Each individual Object is inherently single-threaded. An individual Object has a soft limit of 1,000 requests per second.” It also mentions that this will vary on the load.

We can also look in the docs at the queues product (also built upon DO) and see that the max limit is about 400rps

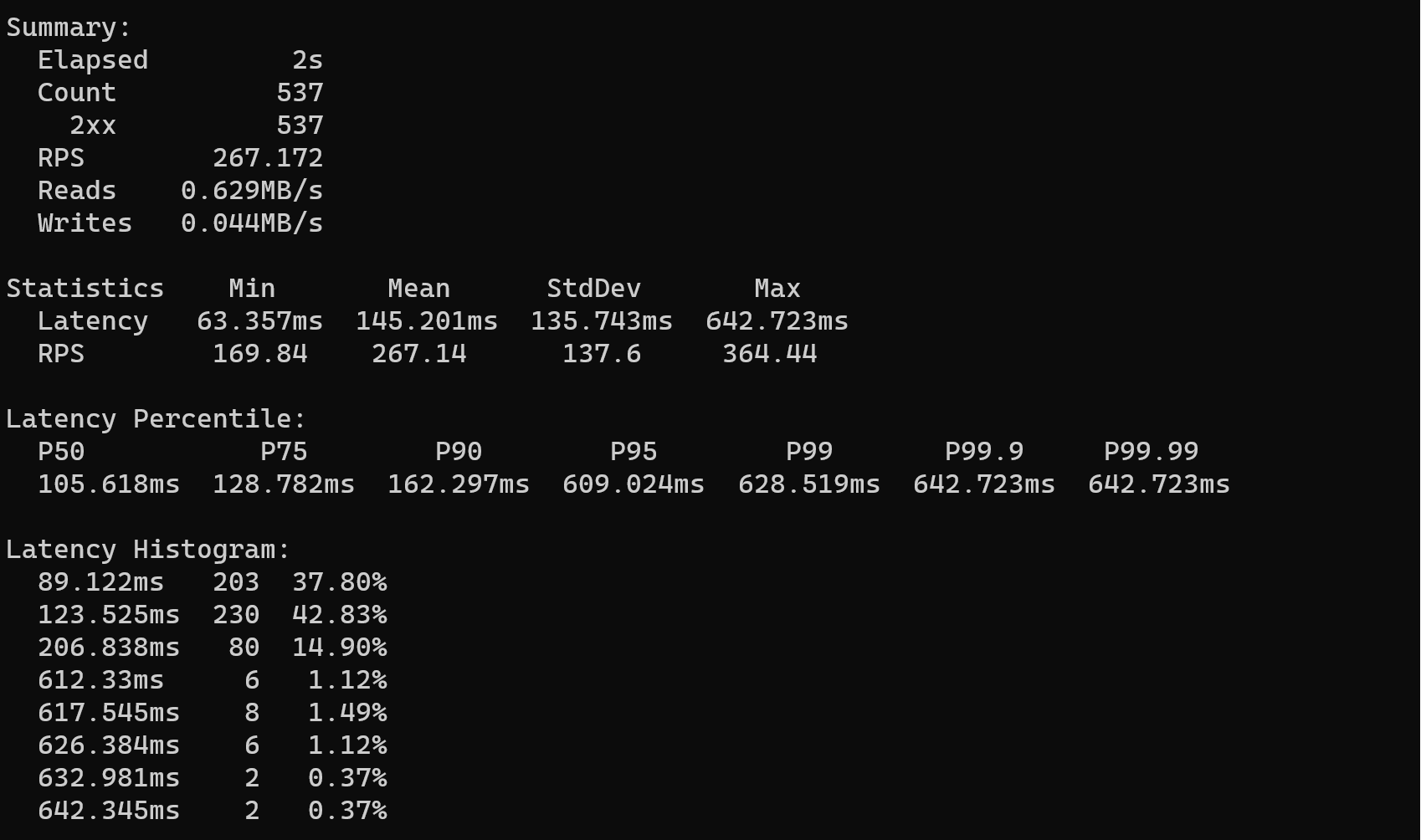

Simple testing from my local machine writing a single 1kb row, CF manages 267 RPS for 2 seconds,

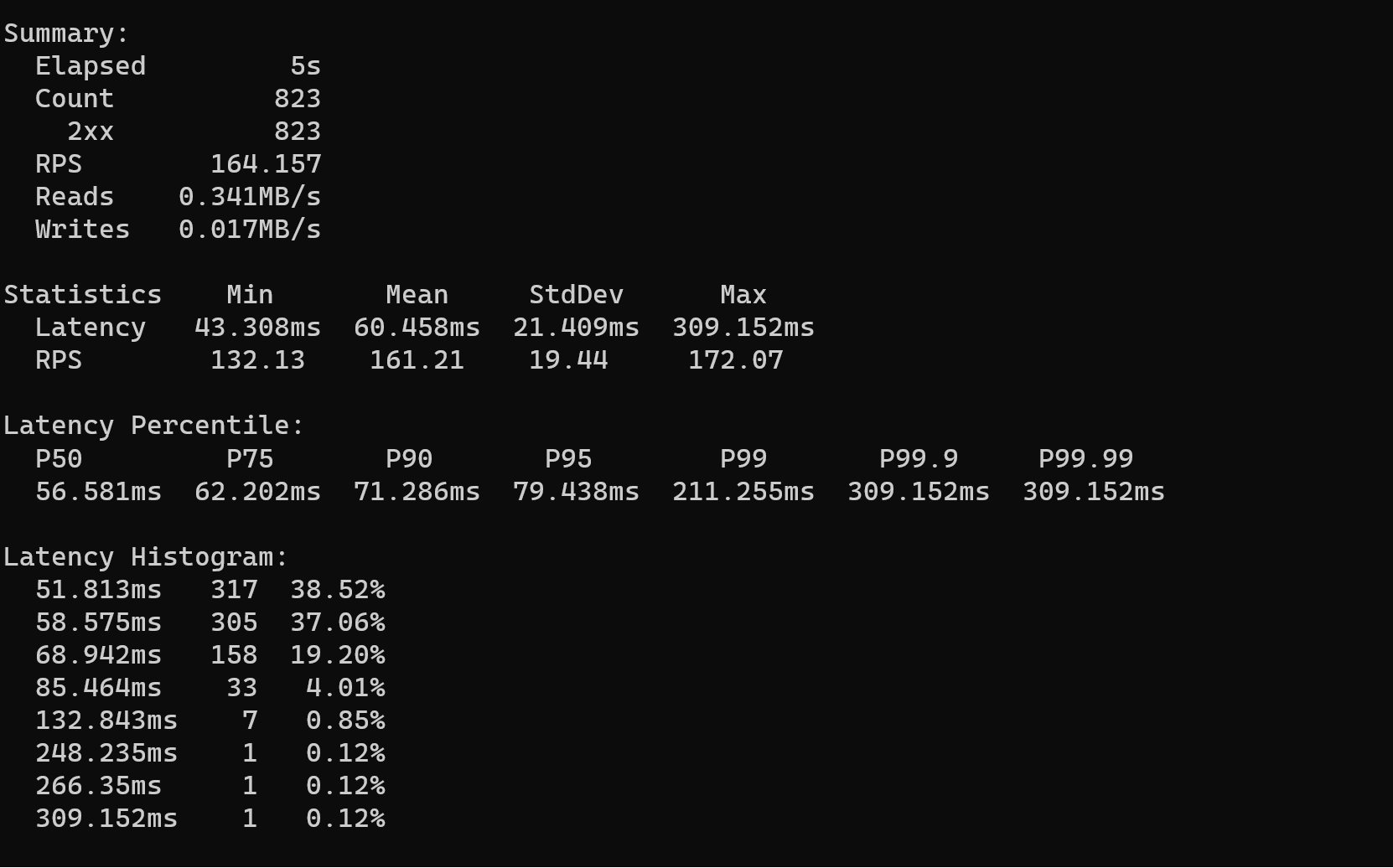

And for reads, this one is doing a count(*) on a ~ 10k table

I was strugling to test for longer as I hit I think I hit a ratelimit and just got 500 returned… I moved to using loader.io to see if it CF was limiting me as I was coming from a single host

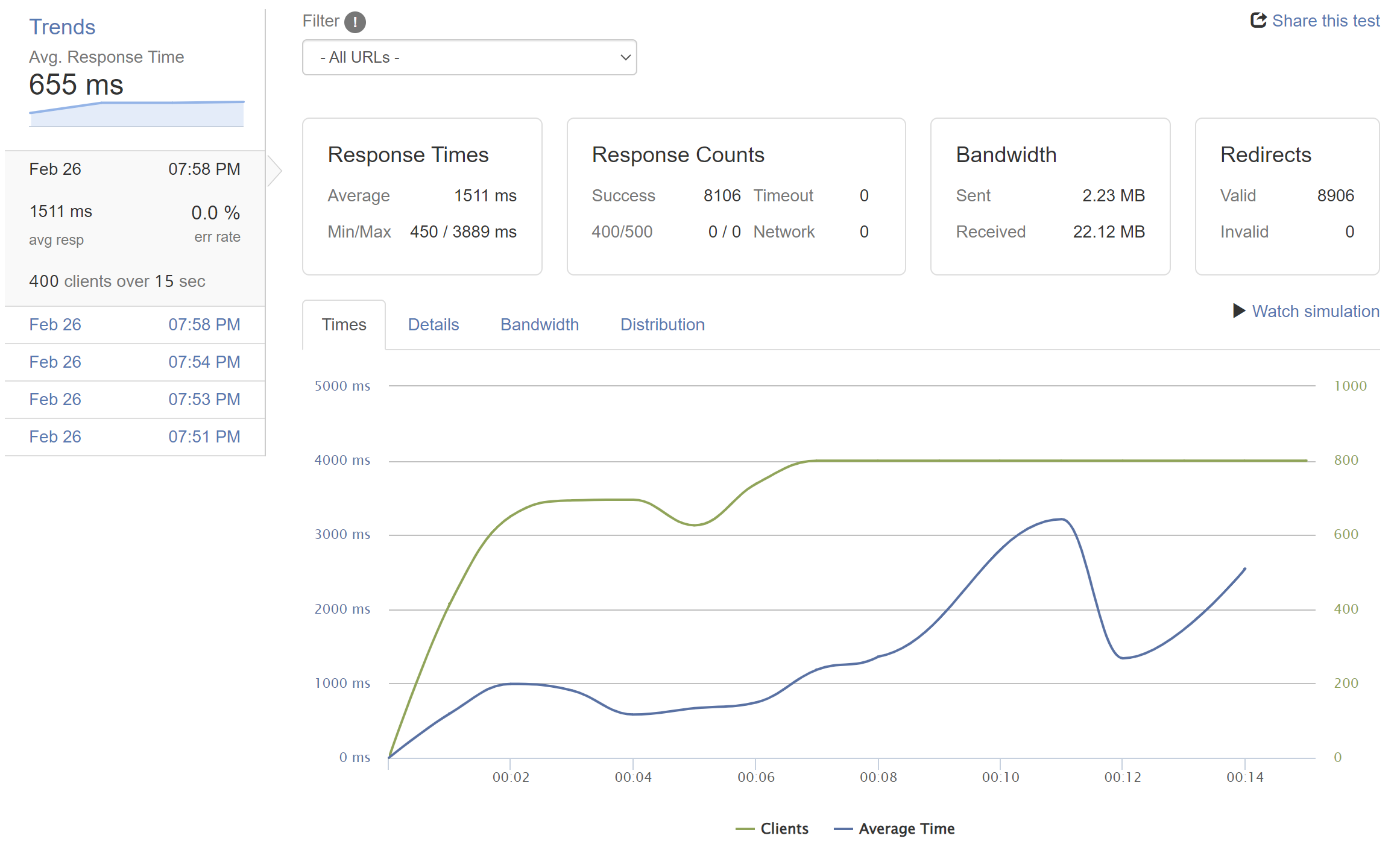

Loader.io writes (the loader.io servers are in the US hence the increased latency)

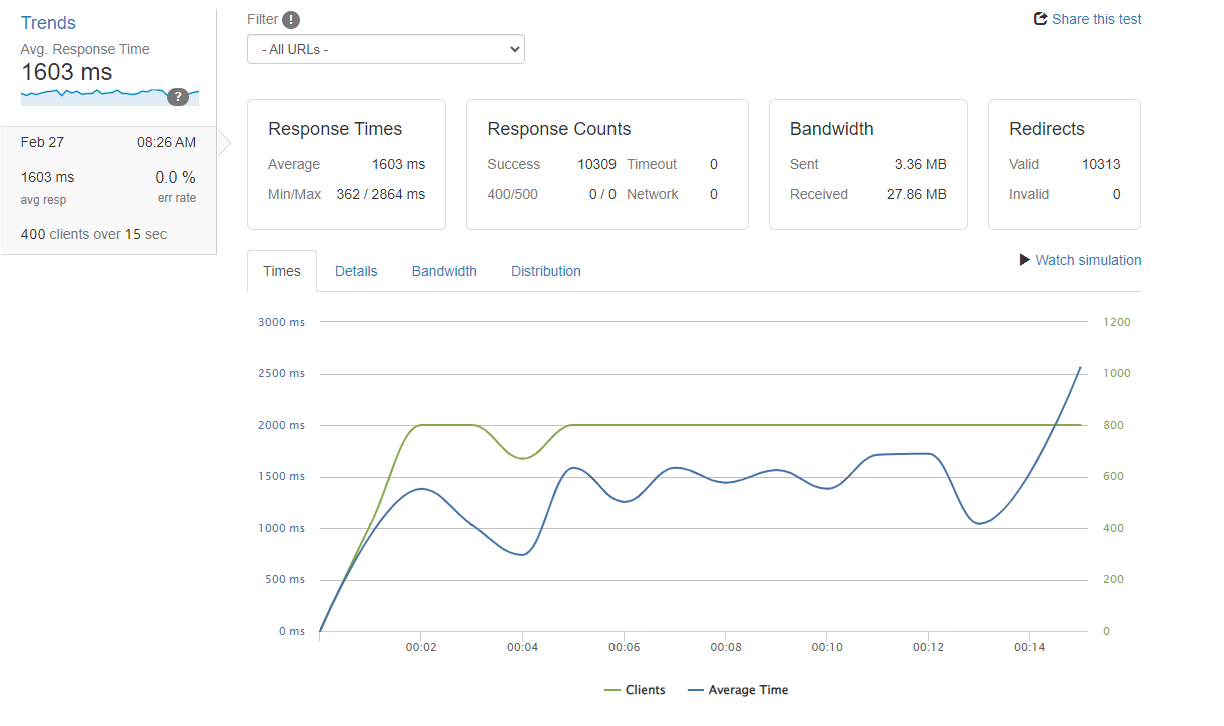

This next test is a repeat of the single write ~ 1kb row, 400 clients ~ 400 rps, it’s strugling here with response times increasing to over a second.

Overcoming the issues

If your using the cloudflare ecosystem, you can try to mitigate the limited database storage and the auto-scaling aspect by sharding.

I don’t think anyone sane would implement there own sharding method… I mean thats what Vitess is for? But you can.

Really simple sharding

Lets say we have an application that allows people to sign up and then store notes. We could implement it in the following way, we could have a user database which would contains tables with the user details and then we could have “notes” database’s.

Say we anticipate 1m users, and each user takes up 1kb, that would take up roughly 1gb to store, that would be fine. But what if 1m users stored 1m notes and each note could be up to 1mb? Yeah not going to work so well.. that would be about 10gb.

We could get round this by making a new database per user, but for the reasons mentioned above this isn’t currently possible.

So instead we could say make 20 databases called notes and then when we register a new user we would randomly pick of one of the 20 databases to stores that users notes in, we would record this by assinging a shardid to the user’s row.

And then when we get a request from that user we would use the shardid to select the right database.

Sounds straightforward? and it is, I made an example of this although I got bored after making 5 notes databases…